Data Science

Data science merges computer science, statistics, and domain expertise to glean insights from data, drive innovation, and solve complex problems. This interdisciplinary field empowers businesses to make decisions backed by quantitative data analysis and predictive analytics.

Why is it transformative? It offers the tools to turn vast amounts of data into actionable insights, fostering data-driven decision-making across all sectors of the economy.

Our Focus: This section delves into the methodologies, technologies, and real-world applications of data science, highlighting its impact on shaping future trends and business strategies.

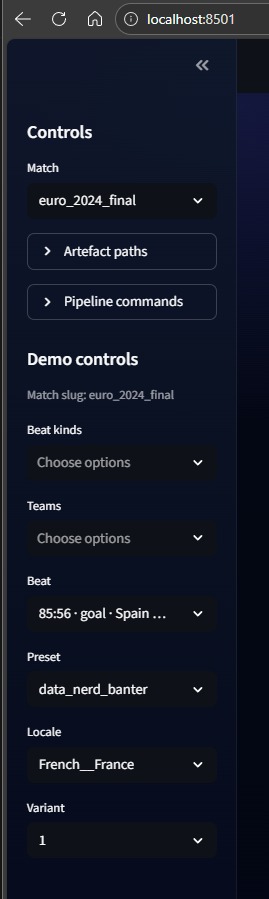

Localisation is not translation - context cartridges for international football

How we constrain generation so the same moment stays factual while sounding native in every market.

1. The failure mode: generic football priors

Hook: translation changes words; localisation changes meaning. Models trained on football internet lore will happily add club nicknames or unrelated national teams unless you constrain them. That is a deal-breaker for an international final.

2. The fix: a context cartridge per locale

Each locale ships with a compact, auditable cartridge that sets boundaries:

- Permitted national-team references and nicknames.

- Tournament-specific phrasing (Euros, final, extra-time).

- Platform-appropriate hashtags and tone hints.

- Explicit exclusions (no club teams, no unrelated nations).
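
A minimal sketch of what one cartridge record and its boundary check might look like. The field names (`locale`, `allowed_references`, `allowed_hashtags`, `excluded_terms`) and the sample values are illustrative assumptions, not the demo's actual schema.

```python
import json

# Hypothetical cartridge record: one JSON object per line of a JSONL file.
CARTRIDGE_LINE = json.dumps({
    "locale": "en-GB",
    "allowed_references": ["England", "Three Lions"],
    "allowed_hashtags": ["#EuroFinal", "#ThreeLions"],
    "excluded_terms": ["Arsenal", "Brazil"],
})


def load_cartridge(line: str) -> dict:
    """Parse one JSONL line and lowercase the term lists for lookups."""
    raw = json.loads(line)
    return {
        "locale": raw["locale"],
        "allowed_references": {t.lower() for t in raw["allowed_references"]},
        "allowed_hashtags": {t.lower() for t in raw["allowed_hashtags"]},
        "excluded_terms": {t.lower() for t in raw["excluded_terms"]},
    }


def violations(text: str, cartridge: dict) -> list[str]:
    """Return the excluded terms that appear in a generated caption."""
    lowered = text.lower()
    return sorted(t for t in cartridge["excluded_terms"] if t in lowered)


cartridge = load_cartridge(CARTRIDGE_LINE)
print(violations("The Three Lions play like Brazil tonight", cartridge))
```

Plain substring matching is the simplest possible check; a real system would probably need tokenisation per locale, which matters for Arabic in particular.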

3. Arabic (Saudi) as a first-class locale

Adding Arabic forces discipline: you cannot lean on English slang, and you must use culturally native football phrasing. The cartridge keeps this localisation tethered to the same beats/state facts.

4. QA becomes visible (and fixable)

If you cannot see failure quickly, you will not fix it. We expose QA signals directly beside the output:

- No local references used (cartridge ignored).

- Hashtag not whitelisted (drift).

- Low heuristic quality score (fast triage, not model grading).
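
The three signals above can be sketched as a single function that returns flags for one caption. The flag names and the word-count heuristic are assumptions made for illustration; the demo's real heuristic is not described here.

```python
import re


def qa_flags(text: str, allowed_references: set[str], allowed_hashtags: set[str]) -> list[str]:
    """Return QA flags for one generated caption."""
    flags = []
    lowered = text.lower()
    # Signal 1: none of the permitted local references appear (cartridge ignored).
    if not any(ref.lower() in lowered for ref in allowed_references):
        flags.append("no_local_reference")
    # Signal 2: any hashtag outside the whitelist counts as drift.
    allowed = {tag.lower() for tag in allowed_hashtags}
    for tag in re.findall(r"#\w+", text):
        if tag.lower() not in allowed:
            flags.append(f"hashtag_not_whitelisted:{tag}")
    # Signal 3: a cheap word-count rule stands in for the heuristic score.
    if len(text.split()) < 4:
        flags.append("low_heuristic_score")
    return flags


print(qa_flags("What a night #GoalOfTheYear", {"Three Lions"}, {"#EuroFinal"}))
```

Returning a list of named flags rather than a single score keeps each failure visible beside the output, which is the point of the section.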

5. Why this matters commercially

- Content that sounds native performs better.

- Brand teams approve constrained systems faster.

- Sponsors get contextual placement, not random highlight spam.

6. Tech stack (current demo)

- JSONL context cartridges per locale with explicit whitelists.

- Beats/state anchoring to prevent invented match facts.

- Streamlit UI for inspection and fast iteration.

7. Call to action

If you work on international sports content and want to compare translation versus true localisation on your fixtures, I am happy to share notes.

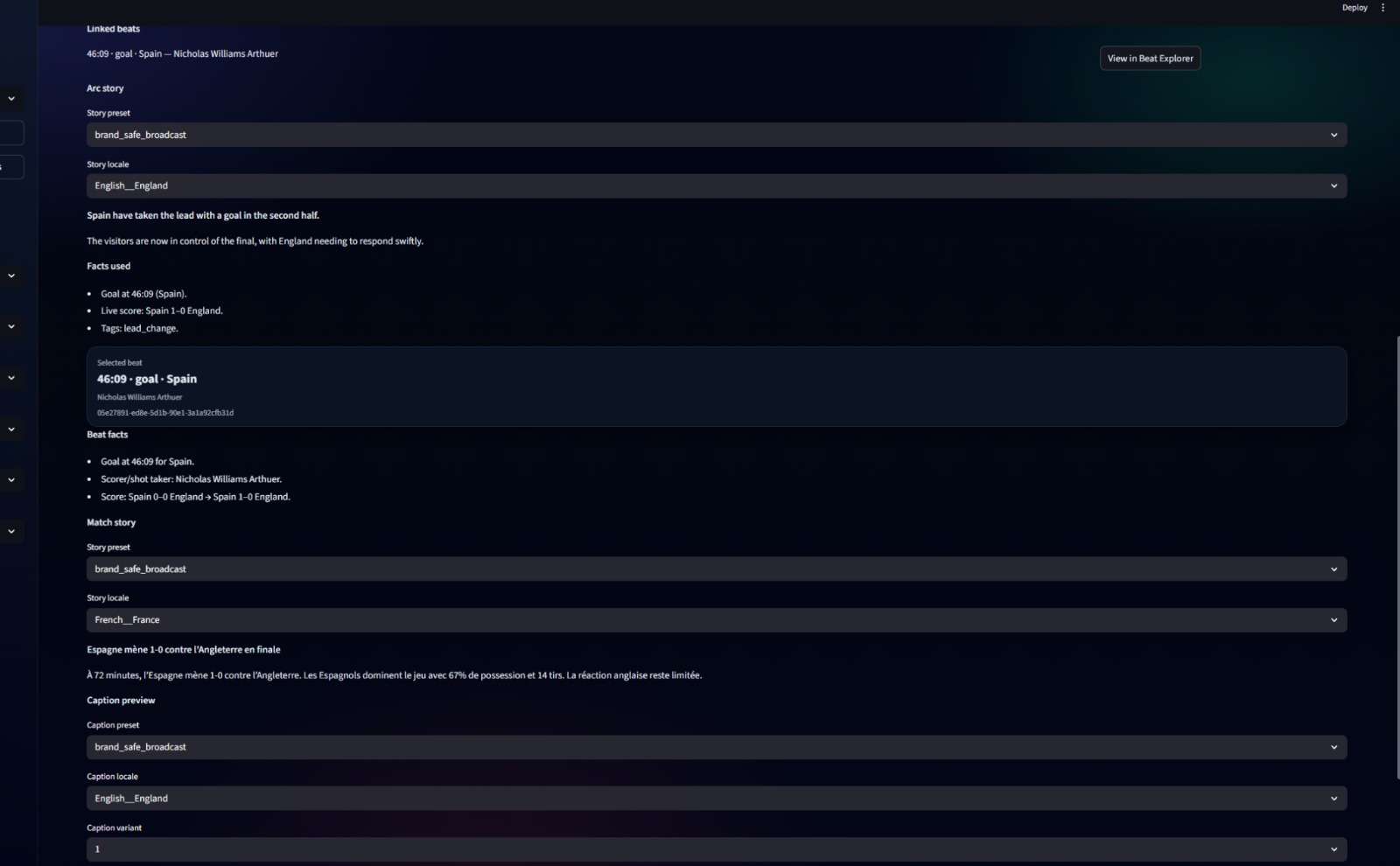

Beyond WAPE - Building a forecast quality framework

We defined auditable quality axes, then wired a W&B thin-slice to compare models safely.

Beyond WAPE — building a forecast quality framework (and wiring it into W&B)

1. The problem

Hook: a forecast can look good on headline error and still be operationally dangerous.

Most teams start with a single metric (often WAPE/MAPE). That’s sensible… until you ship a model that:

- Under-forecasts peaks (bad staffing outcomes).

- Over-forecasts baseline (waste).

- Whipsaws every day as new data arrives (no trust).

- Looks fine on average but fails specific partners/sites (quiet failure).

So the goal became:

Define forecast quality as a small set of auditable axes, then make it easy to compare models quickly.

2. A practical definition of quality (not a metric zoo)

We started with a one-pager: forecast quality as a handful of scores, each tied to a failure mode.

Core accuracy

- WAPE (weighted absolute percentage error) stays the headline.

For daily sales-style forecasts:

AE_t = |y_t - ŷ_t|

WAPE = Σ AE_t / Σ |y_t|

Then define an accuracy score that increases with quality: AccuracyScore = 1 - WAPE.
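
A short sketch of the two definitions above in NumPy. The function names and the zero-denominator handling are my own assumptions.

```python
import numpy as np


def wape(actual, forecast) -> float:
    """WAPE = sum(|y - y_hat|) / sum(|y|)."""
    actual = np.asarray(actual, dtype=float)
    forecast = np.asarray(forecast, dtype=float)
    denom = np.abs(actual).sum()
    if denom == 0:
        return float("nan")  # undefined when there are no actuals to weight by
    return float(np.abs(actual - forecast).sum() / denom)


def accuracy_score(actual, forecast) -> float:
    """AccuracyScore = 1 - WAPE, so higher is better."""
    return 1.0 - wape(actual, forecast)


y = [100, 120, 80]
y_hat = [90, 130, 80]
print(wape(y, y_hat), accuracy_score(y, y_hat))
```

Note that AccuracyScore can go negative when total absolute error exceeds total actuals, so a floor at zero may be worth adding for scorecards.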

Bias balance

Error magnitude alone doesn’t capture systematic skew.

MPE = mean((ŷ - y) / y)

BiasScore = 1 - |MPE| (bounded/capped in practice)
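
As a sketch, the bias score might be computed like this. The cap value of 1.0 and the decision to skip zero actuals are assumptions; the text only says the score is bounded in practice.

```python
import numpy as np


def bias_score(actual, forecast, cap: float = 1.0) -> float:
    """BiasScore = 1 - min(|MPE|, cap), with MPE = mean((y_hat - y) / y)."""
    actual = np.asarray(actual, dtype=float)
    forecast = np.asarray(forecast, dtype=float)
    mask = actual != 0  # the percentage error is undefined where the actual is zero
    if not mask.any():
        return float("nan")
    mpe = np.mean((forecast[mask] - actual[mask]) / actual[mask])
    return float(1.0 - min(abs(mpe), cap))


# A forecast that is consistently 10 % high scores 0.9.
print(bias_score([100, 200], [110, 220]))
```

Because MPE is signed, errors in opposite directions cancel, which is exactly what makes it a measure of skew rather than magnitude.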

Stability / churn

A model that changes its mind every day can be operationally unusable. We measure how much the forecast shifts between successive runs for the same horizon, then penalise large revisions.
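
One way to quantify churn, sketched with pandas. The column names (`run_date`, `target_date`, `forecast`) and the normalisation by mean forecast level are assumptions, and "same horizon" is read here as "same target date".

```python
import pandas as pd


def churn(forecasts: pd.DataFrame) -> float:
    """Mean absolute revision between successive runs, relative to forecast level."""
    ordered = forecasts.sort_values(["target_date", "run_date"])
    # Revision = change in the forecast for a target date from one run to the next.
    revision = ordered.groupby("target_date")["forecast"].diff().abs()
    level = ordered["forecast"].abs().mean()
    return float(revision.mean() / level)


runs = pd.DataFrame({
    "run_date": ["2024-01-01", "2024-01-02", "2024-01-01", "2024-01-02"],
    "target_date": ["2024-01-08", "2024-01-08", "2024-01-09", "2024-01-09"],
    "forecast": [100.0, 110.0, 200.0, 190.0],
})
print(churn(runs))
```

A stability score in the same style as the others could then be `1 - min(churn, 1)`.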

Tail robustness

Average error hides “this explodes in edge cases”. We look at upper quantiles of WAPE across sites/weeks (e.g., 90th percentile) and score down fragile models.
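
A sketch of the tail measure, assuming a long table with hypothetical columns `site`, `week`, `actual` and `forecast`: compute WAPE per site-week, then take the upper quantile.

```python
import pandas as pd


def tail_wape(df: pd.DataFrame, group_cols=("site", "week"), q: float = 0.9) -> float:
    """Upper quantile of per-group WAPE (default: 90th percentile)."""
    work = df.assign(
        abs_err=(df["actual"] - df["forecast"]).abs(),
        abs_actual=df["actual"].abs(),
    )
    sums = work.groupby(list(group_cols))[["abs_err", "abs_actual"]].sum()
    per_group = sums["abs_err"] / sums["abs_actual"]
    return float(per_group.quantile(q))


sample = pd.DataFrame({
    "site": ["A", "B"],
    "week": [1, 1],
    "actual": [100.0, 100.0],
    "forecast": [90.0, 50.0],
})
print(tail_wape(sample))
```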

Peaks and events

Peaks are where the money (and pain) is. We evaluate peak intervals/days separately, and optionally evaluate tagged event windows (promos, holidays, etc.).
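
To illustrate why peaks need their own number, here is a sketch that restricts WAPE to the highest-demand days. Defining a peak as "at or above the 90th percentile of actuals" is an assumption; tagged event windows would simply replace the mask.

```python
import numpy as np


def peak_wape(actual, forecast, peak_quantile: float = 0.9) -> float:
    """WAPE computed only on days at or above the chosen quantile of actuals."""
    actual = np.asarray(actual, dtype=float)
    forecast = np.asarray(forecast, dtype=float)
    mask = actual >= np.quantile(actual, peak_quantile)
    return float(np.abs(actual[mask] - forecast[mask]).sum() / np.abs(actual[mask]).sum())


actual = [10, 10, 10, 10, 100]
forecast = [10, 10, 10, 10, 60]
overall = sum(abs(a - f) for a, f in zip(actual, forecast)) / sum(actual)
print(overall, peak_wape(actual, forecast))
```

In this toy series the overall WAPE is about 0.29 while the peak WAPE is 0.40: the headline number dilutes the under-forecast that matters most.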

3. Golden set first: fast iteration without a platform

To avoid analysis paralysis, we started with a thin slice:

- A small, representative subset (sites × weeks × horizons).

- Consistent splits and aggregation logic.

- Enough plots/tables to see failure modes.

This lets you answer “does model B beat model A?” without waiting for a production pipeline.

4. Tooling: split the audience, reduce the burden

We made a pragmatic call:

- DS/experimentation view → Weights & Biases.

- Commercial scorecards → warehouse + Looker.

W&B is excellent for comparing runs and logging artefacts quickly. Commercial stakeholders want a stable, curated view.

5. The W&B thin-slice implementation

- Compute the metric set across site-week, partner-week, and partner summary levels.

- Log high-signal visualisations (partner × metric heatmap, weekly trendlines, peak/event bars).

- Make comparisons robust with medians and median-of-medians so one chaotic site doesn’t dominate.

The point isn’t a perfect dashboard. It’s auditable, repeatable comparison with minimal overhead.
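
The median-of-medians roll-up can be sketched in a few lines of pandas. The table layout and values are made up for illustration.

```python
import pandas as pd

# Hypothetical site-week WAPE values; site "c" is the chaotic one.
site_week = pd.DataFrame({
    "partner": ["P1"] * 6,
    "site": ["a", "a", "b", "b", "c", "c"],
    "wape": [0.10, 0.12, 0.11, 0.13, 0.90, 2.50],
})

# Step 1: median across weeks for each site.
site_median = site_week.groupby(["partner", "site"])["wape"].median()
# Step 2: median across sites for each partner.
partner_median = site_median.groupby(level="partner").median()
# For contrast: the plain mean, which the chaotic site drags upwards.
partner_mean = site_week.groupby("partner")["wape"].mean()

print(partner_median["P1"], partner_mean["P1"])
```

Here the median-of-medians stays near 0.12 while the mean lands around 0.64, so one unstable site does not decide the model comparison.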

6. Roadmap: from prototype to daily monitoring

- Baseline monitoring — stable daily/weekly slices with alerts for obvious breakage.

- Benchmarking & iteration — compare models/horizons on the golden set with bias/stability guardrails.

- Automation — scheduled evaluation jobs; outputs land in the warehouse.

- Governance — versioned metric definitions and “what changed and why” per release.

7. What I like about this approach

- It keeps WAPE as the commercial headline, but refuses to be naive about it.

- It makes “safe to ship” explicit: bias, churn, robustness, peaks.

- It avoids building a bespoke evaluation product until there is undeniable need.

- It produces artefacts stakeholders can argue with (tables + definitions), not vibes.

8. Tech stack

- Python (Pandas / NumPy)

- Plotly for quick comparatives

- W&B for run tracking + artefact logging

- Designed to export summary metrics to a warehouse for long-term reporting

9. Call to action

If you’re forecasting demand and want a lean evaluation framework teams can maintain, I’m happy to share the pattern — especially the “thin slice first” discipline that stops this becoming a third project.

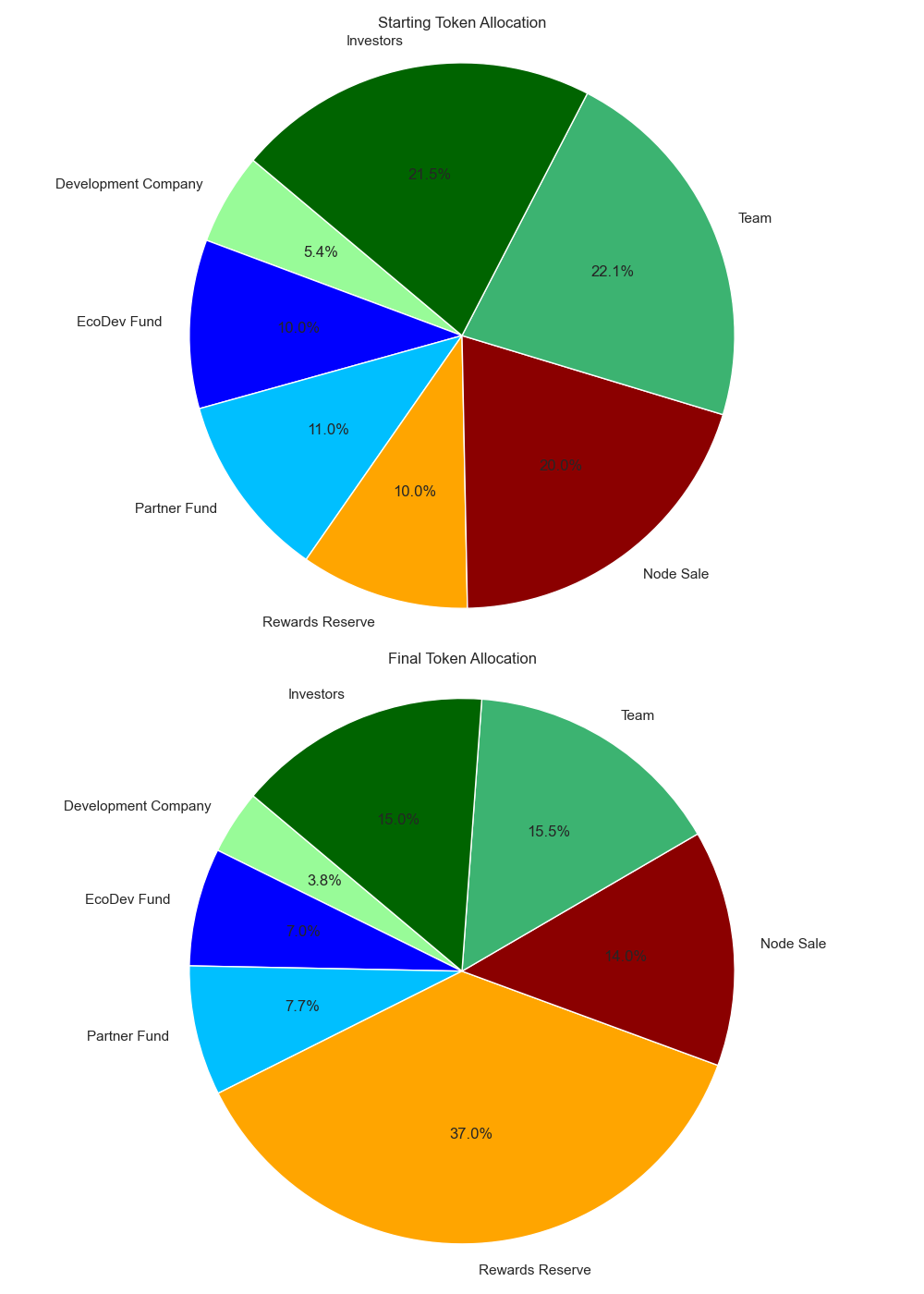

Post-Launch Lessons: Debugging Smart-Contract Rewards & the Year-1 Data Roadmap

What broke, how we fixed it, and where the data team is heading over the next twelve months.

Post-Launch Lessons: Debugging Smart-Contract Rewards & Writing the Year-1 Data Roadmap

1. Launch week: welcome to main-net

Main-net went live on 3 July 2025. Within two hours engineers spotted reward deltas between the on-chain view and the Delta Lake mirror. The next nine days became an impromptu bug-hunt sprint.

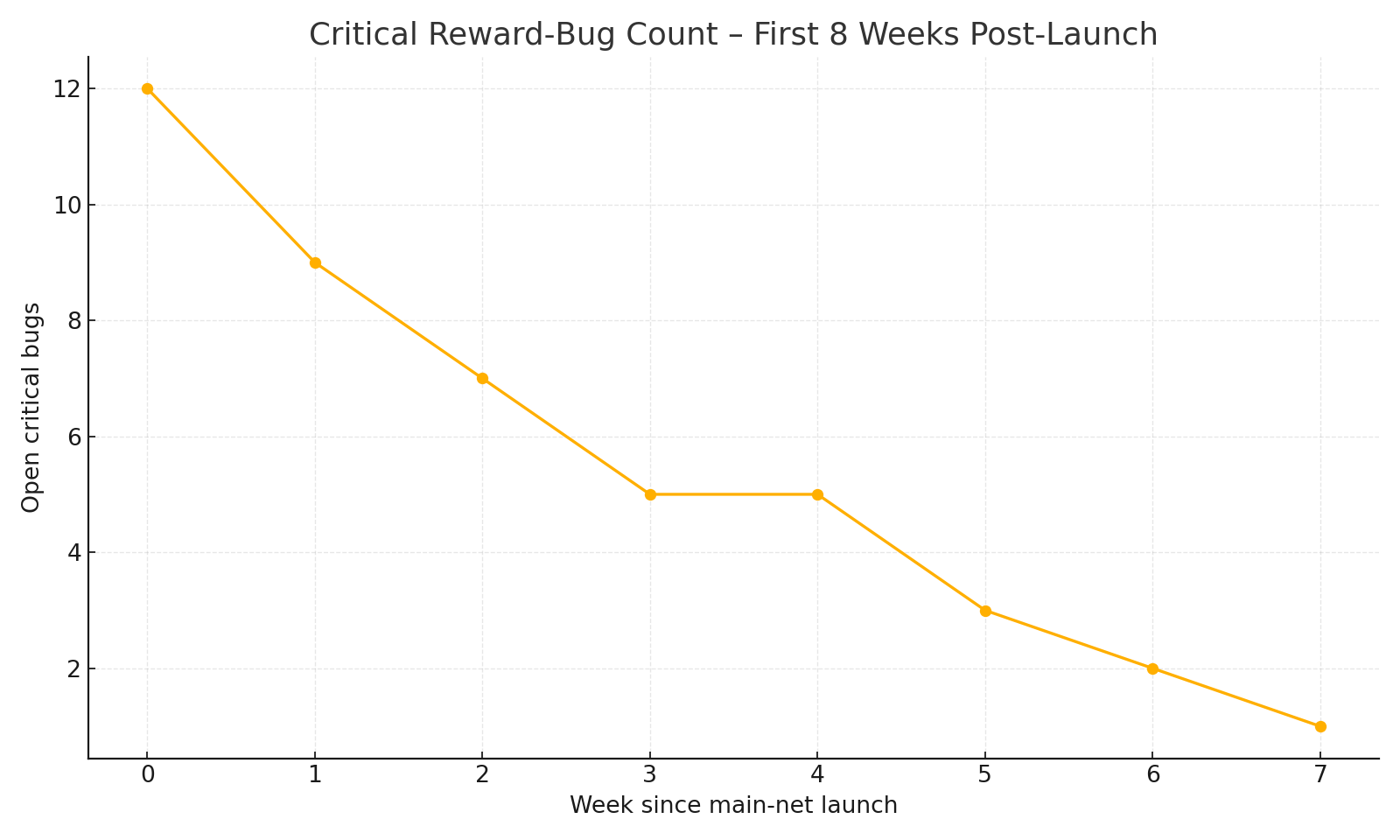

2. Critical bug burn-down

RewardCorrector patch shipped.

Key issues discovered

- Ghost era payouts - a race condition between the era toggle and node-claim transactions. Fixed by enforcing a single-writer pattern in the contract.

- Delegator skew - edge-case where zero-stake delegators inherited stale snapshots. Added guard that skips zero shares.

- Precision bleed - rounding error on high-precision DECIMAL(38,18) paths. Moved to integer math on-chain.
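
The last two fixes can be illustrated off-chain in Python. This is a sketch of the idea only, not the contract code: the function name, the base-unit convention and the rule for assigning the rounding remainder are all assumptions.

```python
def split_reward(total_units: int, shares: dict[str, int]) -> dict[str, int]:
    """Split an integer reward pro rata using integer math only."""
    # Guard: delegators with zero shares are skipped entirely.
    active = {name: s for name, s in shares.items() if s > 0}
    total_shares = sum(active.values())
    if total_shares == 0:
        return {}
    payouts = {name: total_units * s // total_shares for name, s in active.items()}
    # Floor division leaves a small remainder; assign it deterministically
    # (here: to the alphabetically first recipient) so totals always reconcile.
    dust = total_units - sum(payouts.values())
    payouts[min(payouts)] += dust
    return payouts


payouts = split_reward(10**18, {"alice": 1, "bob": 2, "carol": 0})
print(payouts)
```

Working in integer base units means the sum of payouts equals the reward exactly, which gives the on-chain versus off-chain comparison a strict equality to test.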

3. What worked and what didn't

What worked: on-chain + off-chain diff tests running every 30 minutes caught regressions within a single cycle. What didn't: relying on log sampling instead of explicit invariant checks meant we needed manual triage for two full weekends.
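
A diff test of this kind might look like the sketch below. The column names (`era`, `node_id`, `reward`) and the in-memory frames are assumptions standing in for the on-chain view and the Delta Lake mirror.

```python
import pandas as pd


def reward_diff(on_chain: pd.DataFrame, mirror: pd.DataFrame, tolerance: int = 0) -> pd.DataFrame:
    """Return rows that are missing on one side or differ by more than `tolerance`."""
    merged = on_chain.merge(
        mirror,
        on=["era", "node_id"],
        how="outer",
        suffixes=("_chain", "_mirror"),
        indicator=True,
    )
    delta = (merged["reward_chain"].fillna(0) - merged["reward_mirror"].fillna(0)).abs()
    return merged[(merged["_merge"] != "both") | (delta > tolerance)]


on_chain = pd.DataFrame({"era": [1, 1], "node_id": ["n1", "n2"], "reward": [100, 200]})
mirror = pd.DataFrame({"era": [1, 1], "node_id": ["n1", "n2"], "reward": [100, 199]})
mismatches = reward_diff(on_chain, mirror)
print(mismatches[["era", "node_id", "reward_chain", "reward_mirror"]])
```

An invariant check is then simply an assertion that this frame is empty, which removes the need to sample logs by hand.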

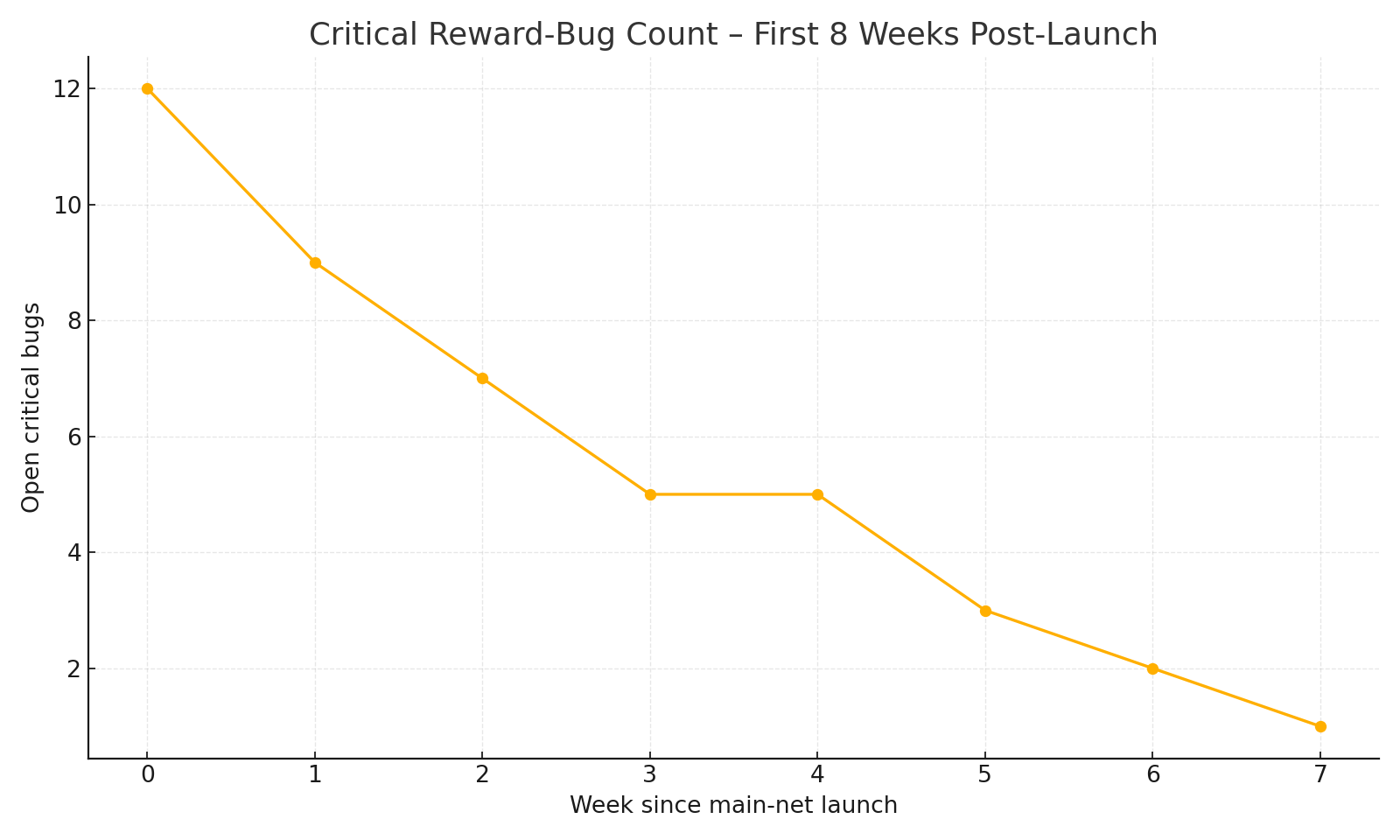

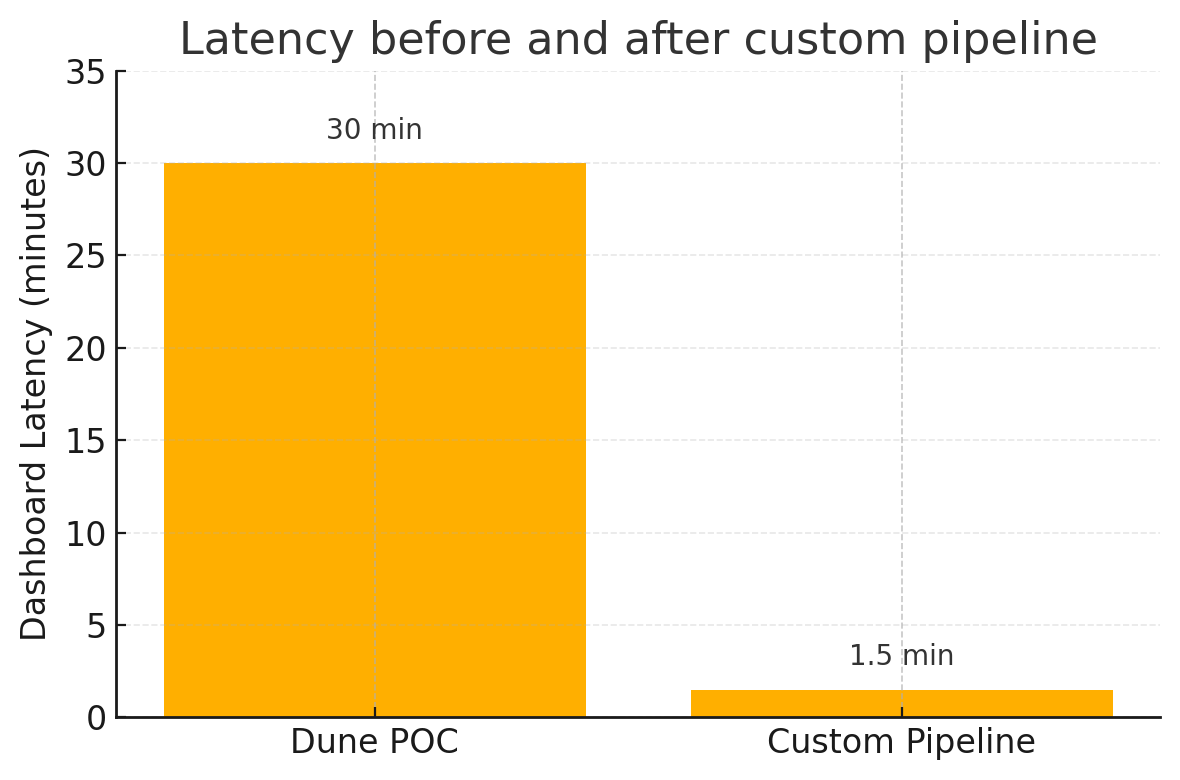

4. Year-1 Data Roadmap (high-level)

The full roadmap deck is internal, but at a glance the next four quarters split into three big work-streams:

- Data Engineering - migrate raw feeds to serverless pipelines, decommission legacy notebooks.

- BI & Visualisation - thin Tableau layer, expose a public /metrics API and embed live charts in docs.

- Research - forecasting token supply vs demand, collateral stress tests and an LLM-driven anomaly detector.

5. Lessons for the next twelve months

- Write invariants first. Unit tests are not enough once gas costs force micro-optimisations.

- Join on-chain and off-chain early. Divergence is easier to spot when everything meets in the same Delta table.

- Separate dashboards by audience. Finance cares about USD, operators care about tokens per PB, governance cares about tail risk.

6. Call to action

Have an idea for a data-science collaboration or want to stress-test some reward maths? Drop me a line via the contact form or reach out on LinkedIn.

Beyond Dashboards - Mining Dune, Then Building Our Own

Dune was a fast on-chain proof-of-concept, but our KPIs lived off-chain too. Here is why we stitched Python scripts and Tableau into the pipeline.

Introduction

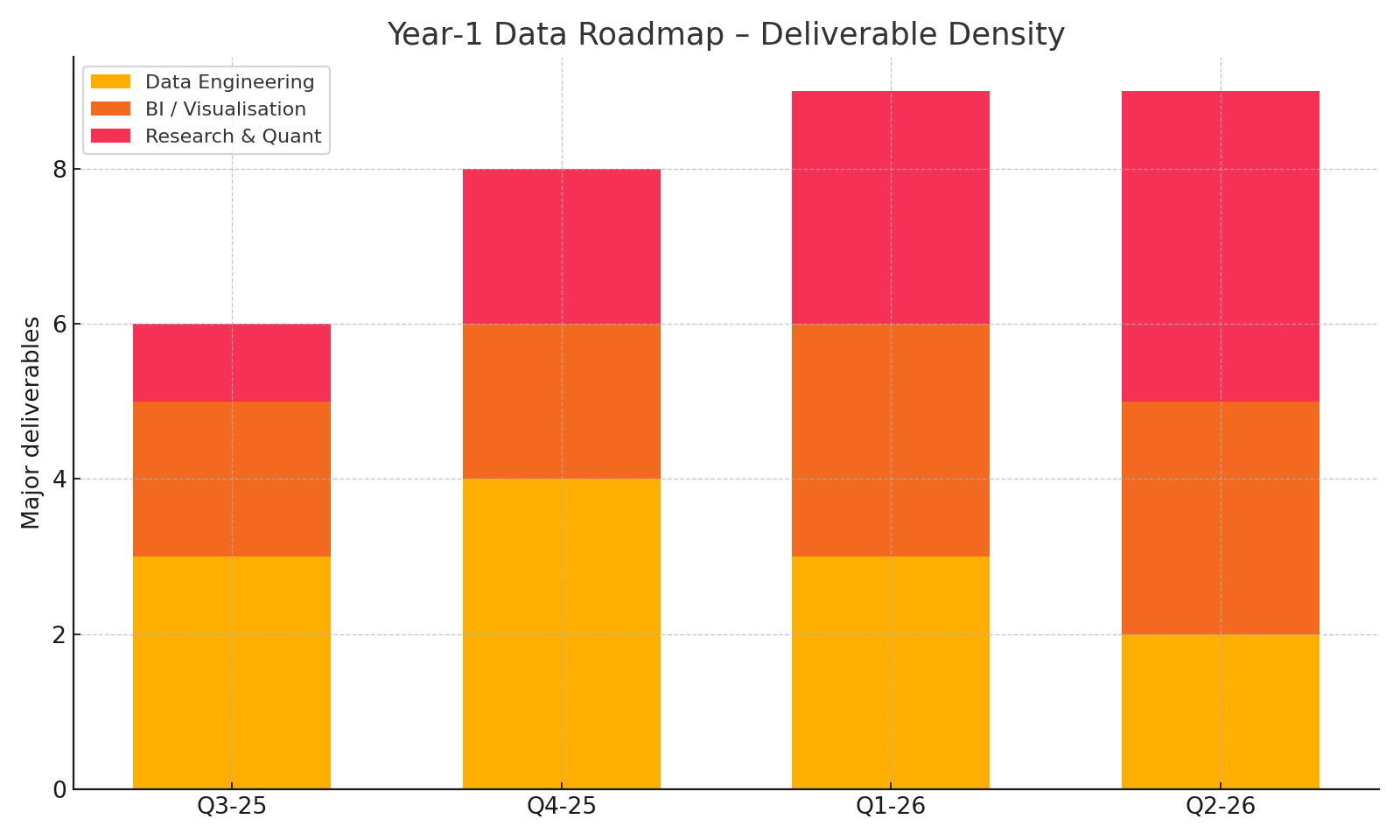

Hook: Dune Analytics is unbeatable for getting token stats in minutes. But when investors ask how many SaaS trials converted last week, on-chain SQL alone will not cut it.

Feasibility study, not production

Important note: none of this went live. January 2024 was a feasibility sprint only. Our mandate was to scope what a mixed on-chain and off-chain BI stack would cost and whether Dune alone could do the job.

The PoC generated three dashboards and a handful of metrics, but it ran on sample data and lasted one week. The lessons still shaped the real pipeline we shipped six months later.

Rapid Dune prototype

We spun up a free Dune workspace, forked ethereum.token_transfers, and built a token-supply panel via the Python snippet below.

# abridged from test.py

from dune_client import DuneClient

import pandas as pd

dune = DuneClient(api_key="<redacted>")

rows = dune.get_latest_result(4550775).get_rows()

print(pd.DataFrame(rows).head())

Within an hour we had circulating-supply, fee-burn and node-reward views. The trade-off was zero visibility into SaaS funnel data or S3 utilisation.

Why Dune fell short

- Read-only – no way to push custom tables without a hefty sponsorship.

- 30-minute lag made real-time incident alerts impossible.

- Cost model jumps sharply once you exceed free row quotas.

Architecture we shipped

We pulled on-chain events from the ethereum.transactions table in Google BigQuery (GBQ) and from our own Alchemy websocket feed, wrote them to Postgres, blended in off-chain CSVs with Pandas, and surfaced the lot in Tableau Cloud.

Tech stack

- Python 3.11 - Pandas - NumPy - Matplotlib - Seaborn

- Postgres 15 hosted on AWS RDS

- Tableau Cloud dashboards with live Postgres connections

- Docker-Compose for local dev; GitLab CI for deploys

Outcome

The mixed pipeline slashed dashboard latency from 30 minutes to under 90 seconds and let product see Web2 funnel data next to on-chain supply curves.

Want the full ER diagram or a live demo? Reach out via the contact form or ping me on LinkedIn.

Slash & Secure - Designing Oracle-Node Penalties

Inside ICN's new slashing engine: how mint events, rolling-window uptime and fixed-day penalties keep oracles honest without nuking decentralisation.

Slash & Secure - Designing Oracle-Node Penalties for ICN

Introduction

Hook: Oracles lie, hardware dies - but your network should never go down with them. Slashing is the surgical scalpel that deters bad behaviour while keeping honest operators in the game.

This deep-dive shows how we modelled and stress-tested ICN's oracle-node penalties, combining mint-driven unlock curves with a fixed-day rolling window to strike a balance between deterrence and predictability.

Tech stack

- Python 3.11 / Pandas / NumPy for schedule maths

- Matplotlib and Seaborn for visualisation

- SciPy for optimisation of penalty parameters

- Hardhat + OpenZeppelin for Solidity unit-tests

- Docker Compose + GitHub Actions for nightly Monte-Carlo batches

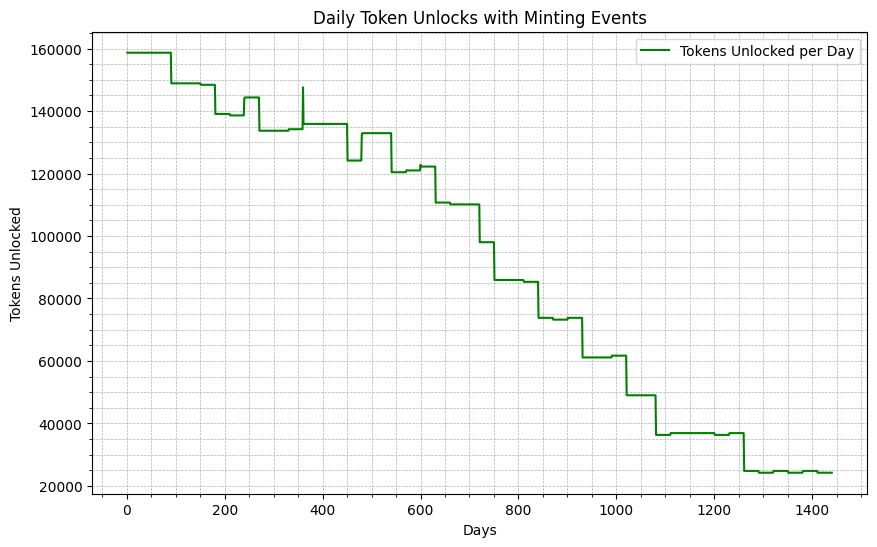

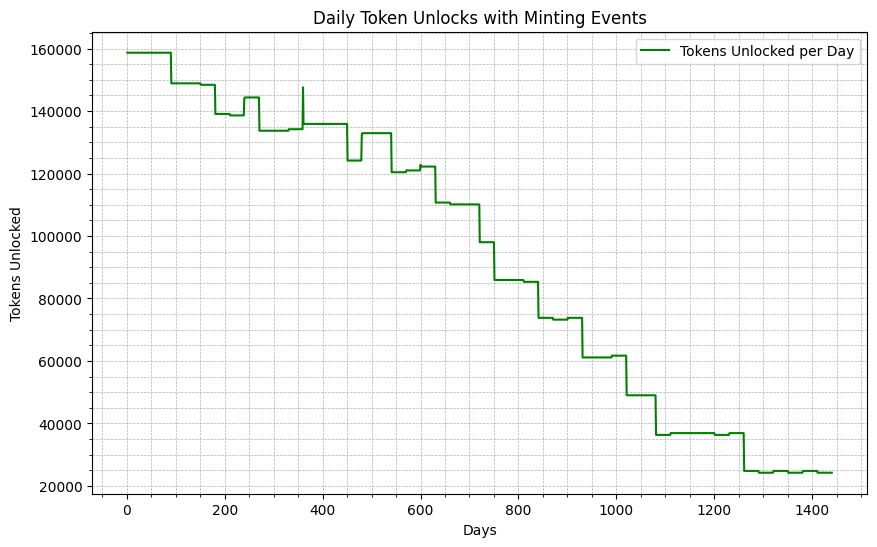

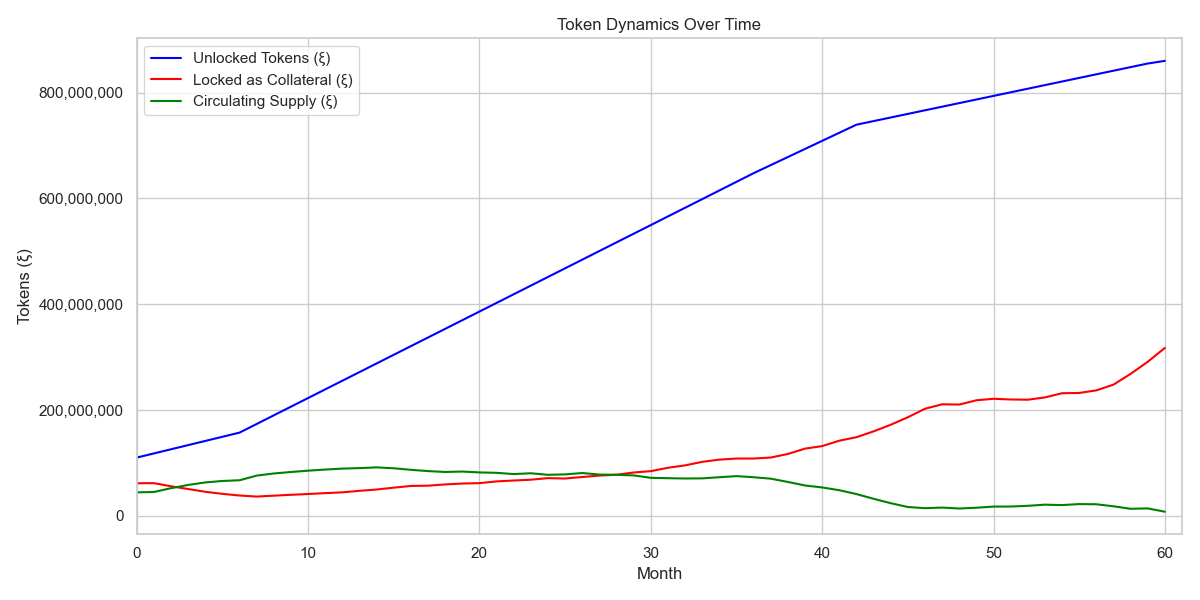

Mint-paced unlock schedule

The baseline unlock curve is paced by the network's monthly mint. Every new mint cycles a tranche of rewards from locked to claimable, front-loading incentives when capital is most expensive.

Notice the stair-step pattern: unlock velocity halves by month 18, aligning emission with projected revenue. This made mint pacing the obvious leash for proportional slashing later on.

Fixed-day penalty model

We opted for a constant 1-day penalty per verified breach, applied inside a 30-day rolling window. Why fixed-day?

- Simplicity for operators - no complex percentage maths.

- Predictable treasury inflow.

- Front-loaded schedule naturally punishes repeat offenders more (each early day unlocks more tokens).
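
A rough sketch of the fixed-day idea under one possible reading: each verified breach forfeits that day's unlock, and a trailing 30-day breach count shows when an operator is back in the clear. The decaying schedule and this interpretation of the rolling window are assumptions, not the ICN model itself.

```python
import numpy as np


def simulate_unlock(daily_unlock, breach_days, window: int = 30):
    """Apply a flat one-day penalty per breach and track the trailing breach count."""
    daily_unlock = np.asarray(daily_unlock, dtype=float)
    breached = np.zeros(len(daily_unlock), dtype=bool)
    breached[np.asarray(list(breach_days), dtype=int)] = True
    # A breach forfeits exactly one day's unlock: flat, no compounding.
    kept = np.where(breached, 0.0, daily_unlock)
    slashed_total = float((daily_unlock - kept).sum())
    # Trailing count of breaches; returns to zero after `window` clean days.
    recent = np.convolve(breached.astype(int), np.ones(window, dtype=int))[: len(breached)]
    return kept.cumsum(), slashed_total, recent


# Hypothetical front-loaded schedule: each day unlocks 1 % less than the day before.
schedule = 100.0 * 0.99 ** np.arange(90)
cumulative, slashed, recent = simulate_unlock(schedule, breach_days=[0, 60])
print(round(slashed, 2), recent[29], recent[30])
```

Because the schedule is front-loaded, the day-0 breach costs more tokens than the day-60 breach even though both are "one day", which is the effect the third bullet describes.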

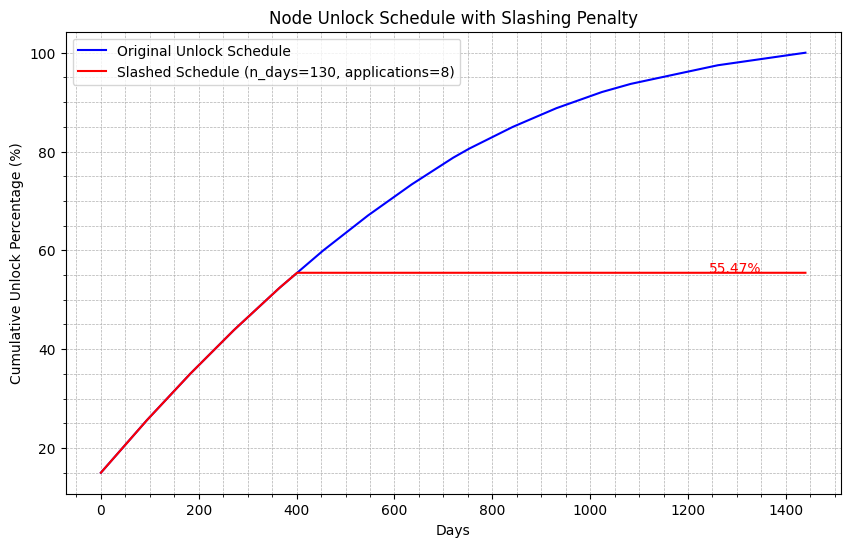

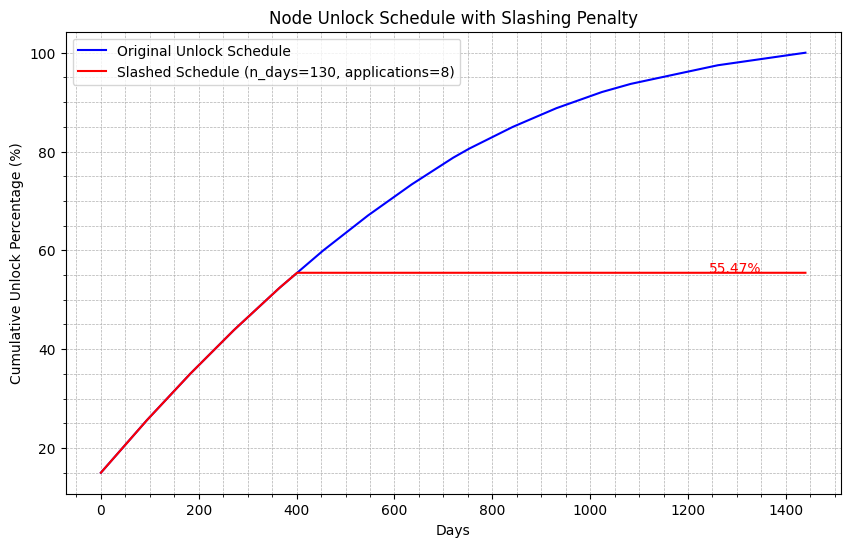

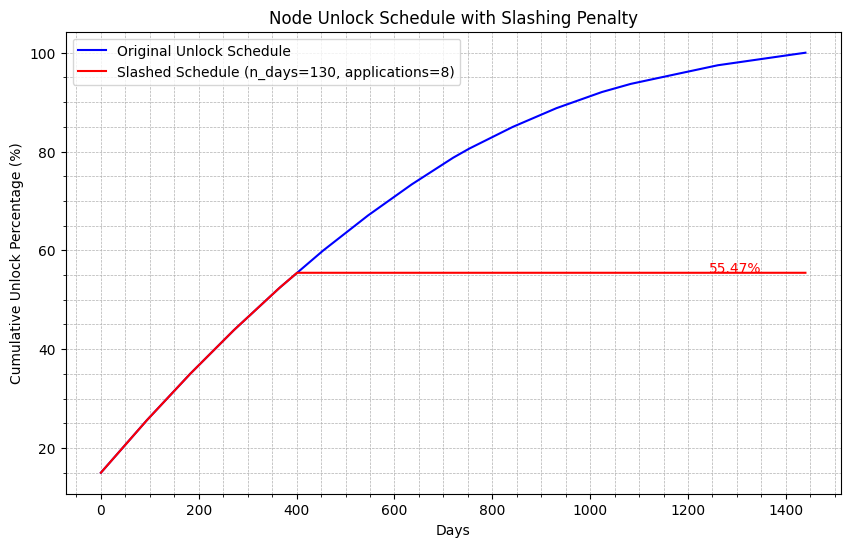

Impact visualised

The red curve shows how the operator's unlock stalls after the eighth breach, freezing at 55 percent while the honest schedule (blue) keeps compounding.

Operational insights

- No compounding: A flat 1-day cut prevents runaway slashing yet still bites early due to the curve's convexity.

- Rolling window recovery: Operators who stabilise uptime for 30 days stop the bleeding - carrot following stick.

- Treasury boost: Redirected tokens flow into the Reward Reserve, seeding future growth incentives.

Useful links

- Ethereum - PoS slashing design

- Chainlink - What is a blockchain oracle?

- Vitalik - Incentive design patterns

- September collateral stress-test - why we need strong deterrence

Next steps

We are extending the model to price-risk scenarios: if ICNT spikes 10×, should slashed tokens be re-minted at lower prices or kept static? Follow-up analysis coming in January.

Questions or want a tailored unlock simulation? Reach out via the contact form or LinkedIn.

Investor Unlock Schedules in Python - Nine Scenario Simulation

Our new class based engine stress tests nine vesting curves and shows how cliffs, steps and linear unlocks drive circulating supply, sell pressure and valuation.

Investor Unlock Schedules - Nine Scenario Simulation

Introduction

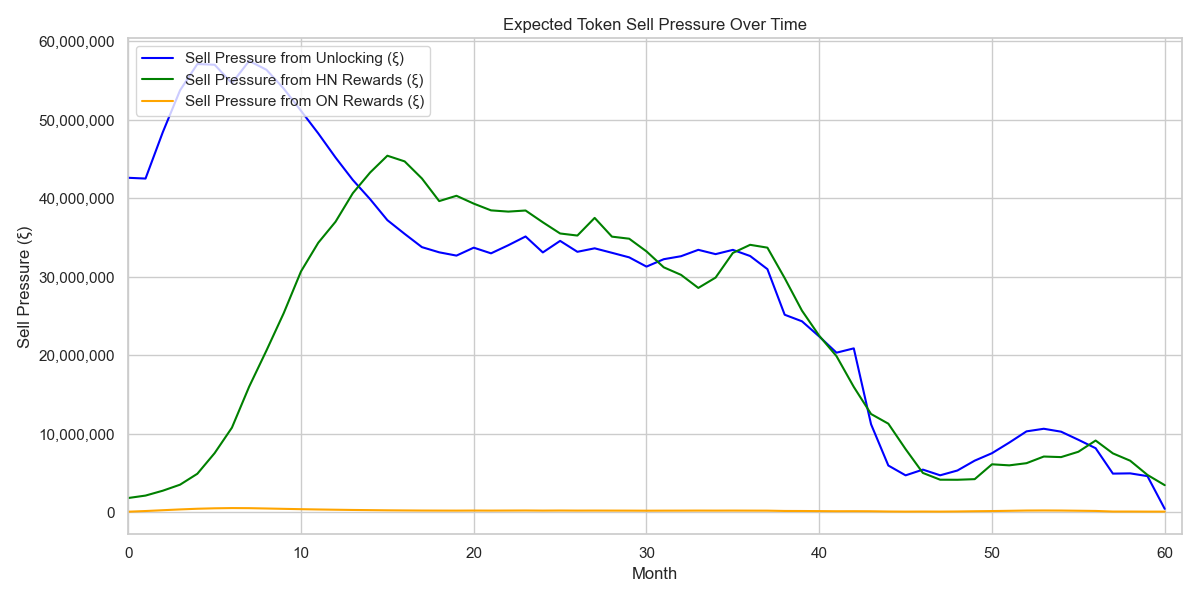

Hook: The wrong unlock curve can dump more tokens on the market than yearly node rewards. We built a fast simulator to find the sweet spot.

Context: Early stage investors hold 25 % of ICNT. How and when those tokens hit secondary exchanges is a key macro driver in the first two years.

Tech stack

- Python 3.11 with Pandas, NumPy and Matplotlib.

- Simulation core packed in two classes: UnlockSchedule (strategy pattern) and TokenEconomy (state container).

- Metrics persisted to Parquet and surfaced in a Streamlit dashboard that autoplots every new run.

- Runs orchestrated with Docker Compose and scheduled via GitHub Actions nightly.
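
Since the engine is private, the following is only a guess at the shape of the two classes: `UnlockSchedule` as a strategy interface and `TokenEconomy` as a state container. Method names, the two example strategies and the allocation figure are all hypothetical.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass, field


class UnlockSchedule(ABC):
    """Strategy interface: fraction of the allocation unlocked by a given month."""

    @abstractmethod
    def unlocked_fraction(self, month: int) -> float: ...


@dataclass
class Linear(UnlockSchedule):
    months: int

    def unlocked_fraction(self, month: int) -> float:
        return min(max(month, 0) / self.months, 1.0)


@dataclass
class CliffThenLinear(UnlockSchedule):
    cliff: int   # nothing unlocks before this month
    months: int  # fully unlocked at this month

    def unlocked_fraction(self, month: int) -> float:
        if month < self.cliff:
            return 0.0
        return min((month - self.cliff) / (self.months - self.cliff), 1.0)


@dataclass
class TokenEconomy:
    """State container: holds the allocation and records unlocked supply per month."""
    investor_allocation: float
    schedule: UnlockSchedule
    history: list = field(default_factory=list)

    def run(self, horizon: int) -> list:
        self.history = [
            self.investor_allocation * self.schedule.unlocked_fraction(m)
            for m in range(horizon + 1)
        ]
        return self.history


linear = TokenEconomy(250.0, Linear(months=24)).run(60)
cliff = TokenEconomy(250.0, CliffThenLinear(cliff=12, months=24)).run(60)
print(linear[12], cliff[12], cliff[18])
```

Swapping the schedule object is the only change needed to run a different scenario, which is what makes a nine-curve comparison cheap.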

Methodology

- Schedule definitions: linear, cliff, cliff+linear, quarterly step down, exponential decay, reverse cliff, performance gated, aggressive step, long tail.

- Simulation horizon: 60 months. All other token flows held at normal demand baseline.

- Outputs: monthly tables for supply, sell pressure (€), buy pressure (€), price and FDV.

- Visuals: three standard PNGs per run plus auto generated CSV for further BI.

Results and analysis

Key takeaways

- Pure linear unlocks raise average monthly float by ~22 % versus a twelve month cliff.

- Cliff plus linear minimises peak sell pressure yet still delivers 70 % liquidity by year two.

- Step down unlocks create supply shock plateaus which showed higher price volatility in Monte Carlo noise.

Useful links

- Messari - Guide to token vesting and unlock mechanics

- Binance Research - How vesting and unlocks affect token markets

- Streamlit docs - rapid dashboards for Python data apps

- Collateral vs Rewards stress test - September analytics drill down

Next steps

Upcoming release will expose a YAML interface so anyone can define unlock curves without touching Python. Contact me if you want an early demo.

Need a bespoke run?

The engine is private but I can run custom scenarios and share the dashboards. Use the contact form or connect on LinkedIn.

Unraveling the Impact of Chart Positioning on App Sales

Explore how app store chart rankings interact with actual sales figures, uncovering nuanced insights that challenge the conventional wisdom of rankings as direct sales drivers. This article draws upon extensive data analysis to dissect these relationships, offering new perspectives on effective app marketing strategies...

Chart Positioning and Its Real Impact on Sales

Introduction

Hook: Did you know that the relationship between app chart rankings and sales is not as straightforward as it seems? While many developers strive for top positions hoping it will boost sales, the underlying dynamics are far more complex.

Overview: This article delves into the intricate interaction between chart positions and app sales, utilizing data-driven insights to unravel myths and reveal truths. We'll explore how different factors such as impressions and user engagements impact sales and rankings, supported by robust statistical analysis and visualization.

Objective: By the end of this read, you'll have a clearer understanding of the real effects of chart rankings on sales, backed by evidence rather than assumption. This insight is crucial for developers and marketers aiming to strategically enhance their app's performance in competitive marketplaces.

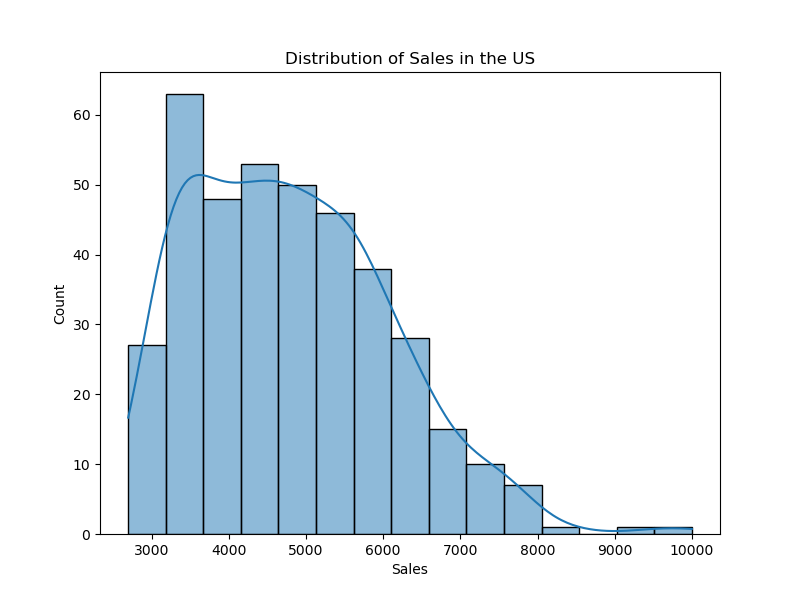

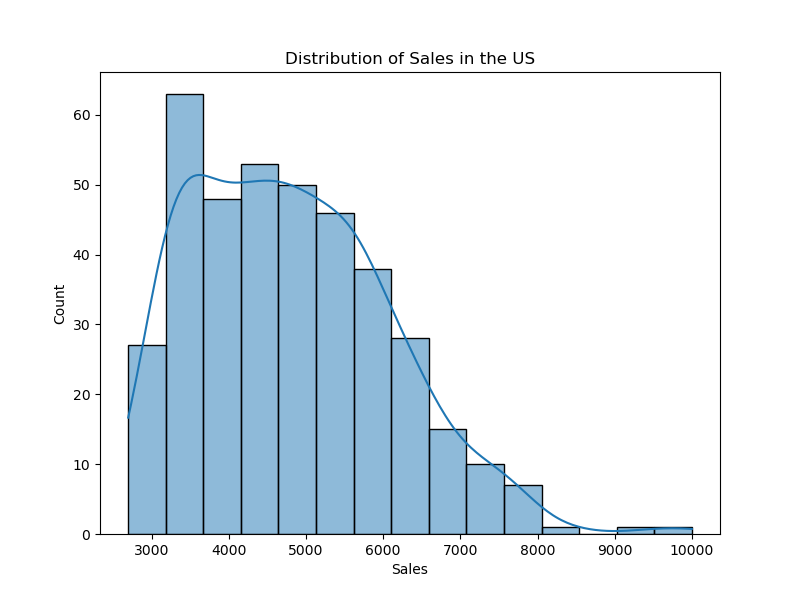

Distribution of Sales in the US

This histogram shows the distribution of sales in the US, highlighting the variability and typical sales volumes that apps experience across different ranking positions.

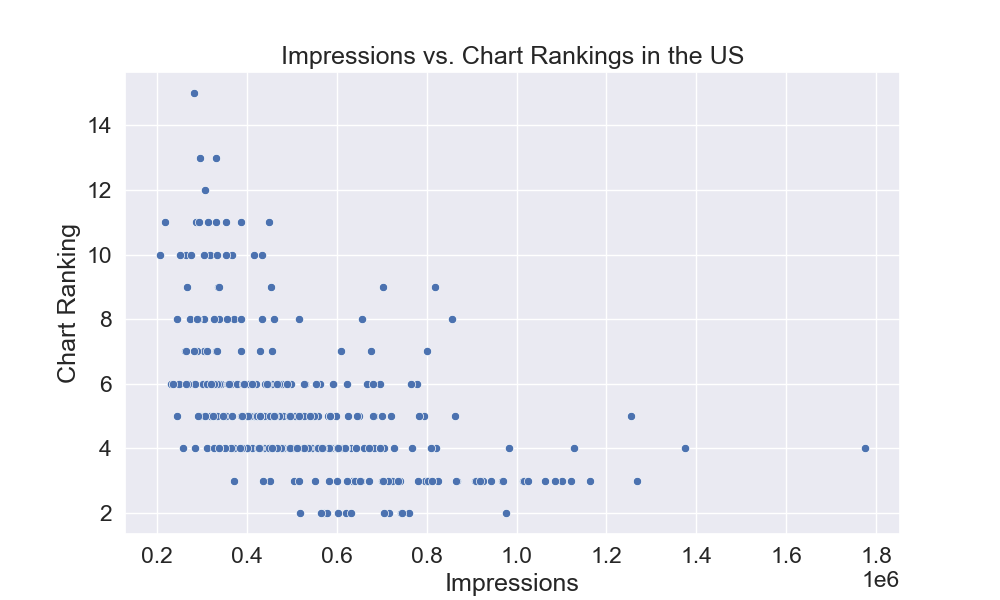

Impressions vs. Chart Rankings in the US

The scatter plot above illustrates the relationship between the number of impressions and chart rankings, providing insights into how visibility might influence or correlate with an app's position in the charts.

Background

Context: The app market is fiercely competitive, where visibility can make or break an app's success. Historically, high chart rankings have been seen as the pinnacle of recognition and a direct driver of sales.

Relevance: In today's data-driven era, our understanding of chart impacts is evolving, making it crucial for developers and marketers to grasp these dynamics fully.

Challenges & Considerations

Problem Statement: One of the principal challenges in our study was to determine the causal relationships between app sales and chart positioning. Initially, it was hypothesized that higher chart positions might directly drive sales; however, this relationship is not straightforward and required extensive analysis.

Due to the dynamic and reciprocal nature of sales and chart rankings influencing each other, distinguishing the cause from the effect posed significant analytical challenges. This complexity necessitated the collection and analysis of longitudinal data spanning several time periods to accurately observe trends, patterns, and potential lag effects between these variables.

Ethical/Legal Considerations: In conducting this analysis, it was crucial to adhere to ethical guidelines concerning data privacy and the responsible use of predictive analytics. All data was handled in compliance with relevant data protection regulations, ensuring that user privacy was safeguarded while deriving meaningful insights.

Methodology

Our analysis utilizes Vector Autoregression (VAR) models and Gradient Boosting to dissect the relationship between sales and chart rankings.

Tools & Technologies: We employed Python for data analysis, using libraries such as pandas for data manipulation, matplotlib and seaborn for visualization, scikit-learn for the Gradient Boosting models, and statsmodels for the VAR models.

# Essential Python Libraries

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

from sklearn.ensemble import GradientBoostingRegressor

Data Collection and Preparation: Data was collected from multiple sources, organized by country, and cleaned for consistency and accuracy.

# Data loading example

df = pd.read_csv('category-ranking-history-us.csv', parse_dates=['DateTime'])

df.set_index('DateTime', inplace=True)

Data Analysis: We combined sales data with app ranking data, applied machine learning models to identify patterns, and used statistical methods to validate our findings.

# Example of data merging and preparation for VAR model

final_df = sales_df.join(rankings_df.set_index('Date'), how='inner')

Visualizing Data: We utilized plots to explore data distributions and relationships between variables, which helped in understanding the impact of various factors on app rankings and sales.

# Plotting sales over time

plt.figure(figsize=(10, 6))

sns.lineplot(data=final_df, x='Date', y='sales_US')

plt.title('Sales Over Time in the US')

plt.savefig('sales_over_time_us.png')

Modeling: VAR models were applied to analyze the time-series data, and Gradient Boosting models were used to assess the importance of various features.

# Setting up a VAR model

from statsmodels.tsa.vector_ar.var_model import VAR

model = VAR(endog=data[['sales', 'rankings']])

model_fit = model.fit(maxlags=15, ic='aic')

Tips & Best Practices: Ensure data quality by thorough cleansing and consider external factors such as market trends when analyzing app performance. Regularly update models and techniques to adapt to new data and insights.

Example of Advanced Analysis: We also explored the cross-correlation between rankings and sales to understand how changes in one could potentially affect the other over different time lags.

# Cross-correlation analysis

import statsmodels.api as sm

max_lag = 14  # illustrative lag window; choose to suit the data

ccf_values = sm.tsa.stattools.ccf(rankings, sales, adjusted=True)[:max_lag]
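
To make the lag idea concrete, here is a simplified NumPy sketch that computes a plain Pearson correlation between one series shifted by `lag` steps and the other. It is not a drop-in replica of the statsmodels estimator, and the synthetic data (rankings that copy sales two steps later) is invented for the example.

```python
import numpy as np


def lagged_corr(x, y, max_lag: int) -> list[float]:
    """Pearson correlation between x[t + lag] and y[t] for lag = 0 .. max_lag - 1."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = min(len(x), len(y))
    return [float(np.corrcoef(x[lag:n], y[: n - lag])[0, 1]) for lag in range(max_lag)]


rng = np.random.default_rng(0)
sales = rng.normal(size=200)
# Synthetic rankings signal that repeats sales with a two-step delay.
rankings = np.concatenate([np.zeros(2), sales[:-2]])

values = lagged_corr(rankings, sales, max_lag=5)
print(int(np.argmax(values)))
```

The peak at lag 2 recovers the delay that was built into the data; on real series the peak is rarely this clean, and a high correlation at some lag still does not establish direction of cause.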

Results

Findings: Our analysis uncovered detailed interactions between various app marketplace metrics such as sales, downloads, page views, impressions, and rankings. These interactions are quantified using advanced statistical methods to show how changes in one area can influence outcomes in another.

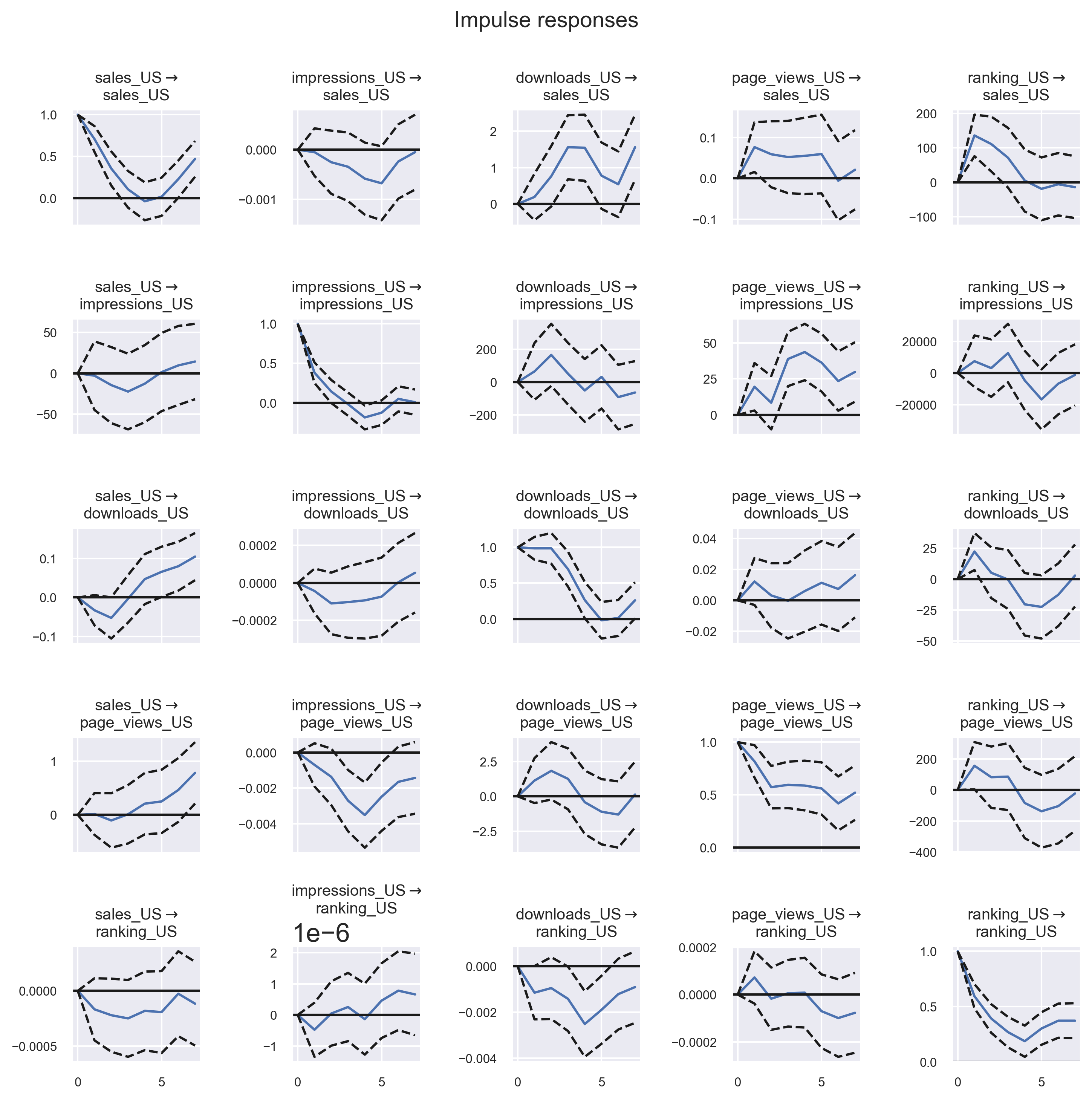

Impulse Response Analysis

The Impulse Response Functions (IRF) chart below shows the effect of a one-time change in one metric (like sales) on the future values of other metrics over time. For instance, an increase in sales today might affect the number of downloads and page views in the following days. Each line in the chart represents a different type of interaction, helping us see the duration and magnitude of impacts.

This analysis is crucial for understanding the temporal dynamics within the app market, indicating that actions taken today could have prolonged effects, thereby informing more strategic decision-making.
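
For intuition, the impulse response of a first-order VAR can be traced by hand: the response h steps after a shock is the coefficient matrix applied h times. The matrix below is hypothetical, not a fitted result, and the shock is non-orthogonalised.

```python
import numpy as np

# Hypothetical VAR(1) coefficients; variable order is [sales, downloads].
A = np.array([[0.5, 0.0],
              [0.3, 0.4]])


def impulse_response(coef: np.ndarray, shock, horizons: int) -> np.ndarray:
    """Return responses for horizons 0..horizons; row h equals coef^h @ shock."""
    responses = [np.asarray(shock, dtype=float)]
    for _ in range(horizons):
        responses.append(coef @ responses[-1])
    return np.vstack(responses)


# One-unit shock to sales, traced over three days.
irf = impulse_response(A, shock=[1.0, 0.0], horizons=3)
print(irf)
```

In this toy system a sales shock lifts downloads by 0.3 the next day and the effect fades from there. With statsmodels, the fitted results object exposes an `irf` method that computes the equivalent for the estimated model.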

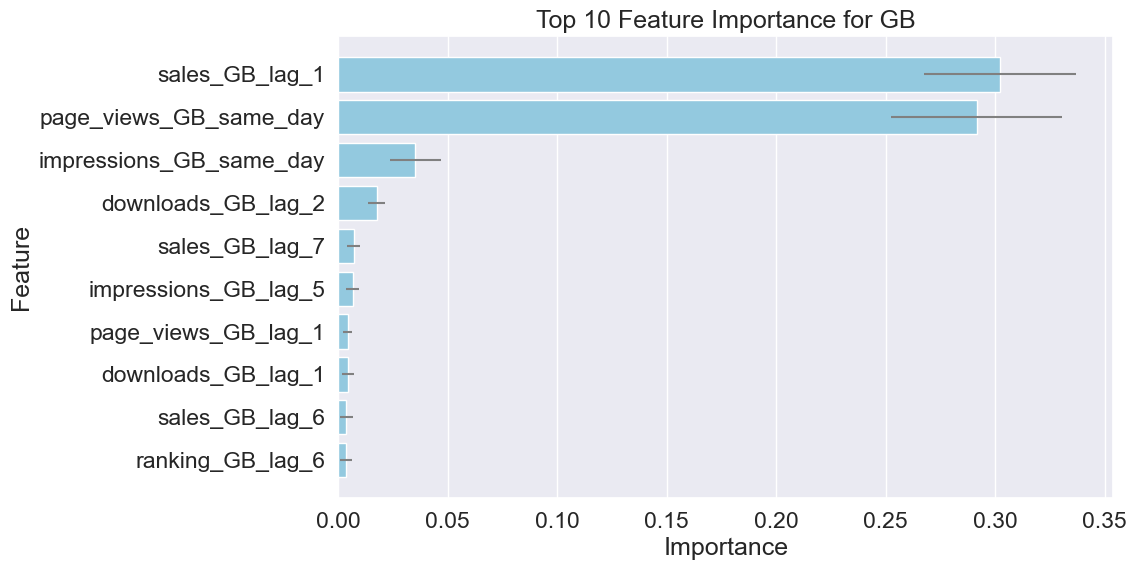

Feature Importance for Sales in Great Britain (GB)

This feature importance chart for Great Britain highlights which variables contribute most to predicting sales. The 'sales_GB_lag_1' at the top suggests that sales from the previous day are highly predictive of sales today, indicating momentum. 'page_views_GB_same_day' shows that same-day page views are also strongly predictive of sales, although feature importance on its own does not establish that page views cause sales.

Understanding these drivers helps marketers focus on what really affects sales, such as enhancing same-day visibility and maintaining sales momentum.
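
Feature names such as 'sales_GB_lag_1' and 'page_views_GB_same_day' imply a lag-feature table along these lines. This is a sketch with made-up numbers, and the exact feature set used in the analysis is an assumption.

```python
import pandas as pd

daily = pd.DataFrame(
    {"sales_GB": [5, 7, 6, 9], "page_views_GB": [50, 70, 65, 90]},
    index=pd.date_range("2024-01-01", periods=4, freq="D"),
)

features = pd.DataFrame({
    # Yesterday's value of the target: captures momentum.
    "sales_GB_lag_1": daily["sales_GB"].shift(1),
    # Same-day and previous-day visibility metrics.
    "page_views_GB_same_day": daily["page_views_GB"],
    "page_views_GB_lag_1": daily["page_views_GB"].shift(1),
}).dropna()

# Align the target with the rows that survived the shift.
target = daily.loc[features.index, "sales_GB"]
print(features.assign(target=target))
```

A frame like this can be passed to scikit-learn's `GradientBoostingRegressor`, whose fitted `feature_importances_` attribute yields the kind of ranking shown in the chart.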

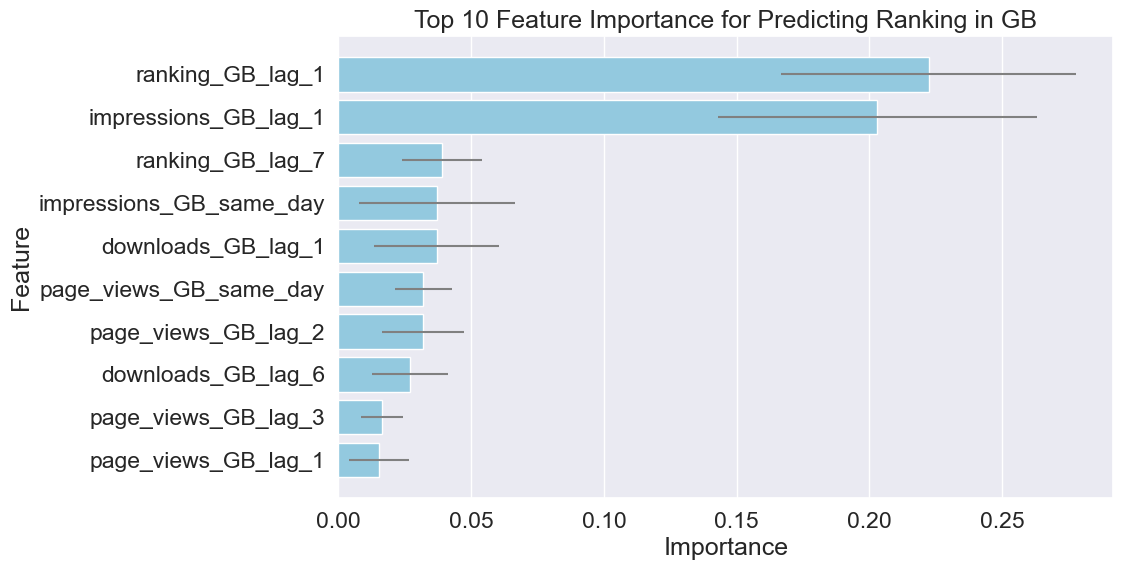

Feature Importance for Predicting Rankings in Great Britain (GB)

The analysis of factors affecting rankings in Great Britain shows that both immediate and slightly delayed metrics (like 'impressions_GB_same_day' and 'impressions_GB_lag_1') are crucial. This reflects the fast-moving nature of app popularity, where today's user engagement directly boosts tomorrow's app ranking.

Strategically, this suggests that increasing visibility and interaction with users can provide immediate benefits in rankings, highlighting the importance of daily marketing efforts.

Analysis: The results validate the interconnectedness of sales, impressions, and rankings, suggesting a bidirectional relationship where not only do rankings influence sales and visibility, but these factors also impact rankings. This mutual influence highlights strategic points for enhancing app performance in competitive markets.

Conclusion

Summary: Our comprehensive analysis has highlighted the complex interplay between app rankings, sales, impressions, and user engagements within digital marketplaces. Through advanced statistical methods such as Impulse Response Functions and machine learning techniques like Gradient Boosting, we've demonstrated how various factors dynamically influence each other over time.

Implications: The findings underscore the importance of a multifaceted approach to app marketing and development. Rather than focusing solely on improving chart rankings, developers and marketers should consider a broader strategy that enhances user engagement and optimizes visibility to drive sales. Our analysis shows that actions taken today can have sustained effects, influencing future performance and necessitating proactive, data-informed decision-making.

Future Directions: Further research could investigate the effects of marketing campaigns and user reviews on app rankings and sales, offering deeper insights into consumer behavior. Additionally, exploring these dynamics across different platforms and regions could yield tailored strategies that cater to specific market conditions and user preferences.

To continue advancing in this competitive industry, embracing a holistic view of data analytics and continuous innovation in marketing strategies will be key.

Call to Action

As we conclude our journey into the analytical depths of chart positioning and app performance, it's your turn to explore these concepts in your projects. Whether you're a seasoned data scientist or just starting out, your insights and experiences are valuable to the community:

- Engage: Share your thoughts or queries about this analysis on social media or through email. Have you conducted similar studies? What challenges have you faced, and what insights have you uncovered? We'd love to hear from you and foster a community of shared learning.

- Share: If you found this article helpful, consider sharing it within your network or social media. Spreading knowledge helps us all grow together.

- Experiment: Use the methodologies and code snippets provided to explore your own datasets. Whether you're interested in app market dynamics or another data-driven topic, the tools and techniques discussed here can offer valuable insights.

Links for Further Reading and Resources

Enhance your understanding and skills with these resources:

- Vector Autoregression (VAR) - Comprehensive Guide with Examples in Python: A detailed guide to understanding and implementing VAR in Python.

- Vector Autoregressions in statsmodels: Explore more about VAR models using the statsmodels library.

- Develop Your First XGBoost Model in Python: A beginner's guide to understanding and applying XGBoost in Python.

- Official XGBoost Documentation: Dive into the official XGBoost documentation to explore comprehensive resources on using XGBoost for scalable and efficient modeling.

Revolutionizing Forecasting: The Power of Prophet in Data Science

Delve into the capabilities of Prophet for innovative forecasting. This comprehensive guide covers the integration of SQL data retrieval, Prophet modeling, and Python analysis to forecast future trends with precision...

Advanced Forecasting with Prophet

Introduction

Hook: Ever wondered how advanced forecasting models like Prophet predict future trends with remarkable accuracy?

Overview: This piece unveils the power of Prophet, integrated with SQL and Python, to revolutionize forecasting in data science. Dive deep into the sophisticated mechanics behind one of today's most influential forecasting tools.

Objective: By the end of this article, you'll understand how to harness Prophet for your predictive analyses and decision-making processes. We'll explore the methodological framework, dissect real-world data scenarios, and discuss how you can implement this powerful tool to optimize your strategic outcomes.

Background

The Rise of Forecasting Tools: In today's dynamic market environments, the ability to predict future trends accurately is a key competitive advantage. Over the years, businesses have leveraged various statistical methods and machine learning models to forecast sales, user growth, and market trends.

Introduction of Prophet: Open-sourced by Facebook in 2017, Prophet was designed to address specific issues with existing forecasting tools that were not well suited to the business forecasting tasks commonly faced by industry practitioners. Prophet stands out by allowing for quick model adjustments that can accommodate seasonal effects, holidays, and other recurring events, making it particularly useful for data with strong seasonal patterns or those impacted by scheduled events.

Prophet's Approach: Unlike traditional time series forecasting models, Prophet is robust to missing data and shifts in trend, and it handles outliers well. This makes it an invaluable tool for analysts who need to generate reliable forecasts without getting bogged down by the complexities of tuning and re-tuning their models when new data becomes available.

Challenges & Considerations

Complex Data Preparation: Effective use of Prophet requires meticulous data preparation. Handling missing data, accounting for outliers, and aggregating data at the correct time intervals are essential for accurate forecasts. Ensuring data quality and proper formatting is foundational and can significantly impact model performance.

Sufficient Historical Data: One of the fundamental challenges in forecasting is having a robust dataset. Attempting to predict future trends, such as annual sales, with only a few weeks of data can lead to highly inaccurate predictions. Forecasting models like Prophet require extensive historical data to identify and learn patterns effectively.

Model Tuning and External Events: Tuning Prophet involves setting parameters to accurately reflect underlying patterns, such as seasonality and trends, without overfitting. Incorporating external events like new product releases or market disruptions requires manual intervention and assumptions, as Prophet does not natively predict impacts from unforeseen or new variables.

Operational Integration and Pipeline Challenges: Integrating Prophet forecasts into business operations involves building robust data pipelines to automate forecast updates, manage dependencies, and handle computational demands. Ensuring these systems are scalable and reliable presents a significant challenge, especially when aligning them with real-time business processes.

Methodology

This section outlines the comprehensive approach taken to forecast game sales, starting from data retrieval to predictive analysis using Prophet. The process includes advanced data manipulation techniques to refine the dataset for more targeted insights.

Data Retrieval and Preprocessing

Data is first extracted using a refined SQL query that targets sales data across various dimensions. Special emphasis is placed on the top 10 countries, games, and platforms, identifying key players and trends:

-- SQL query to retrieve data

SELECT

country,

game,

platform,

date,

sales

FROM

sales_data

WHERE

date BETWEEN '2022-01-01' AND '2023-12-31';

Following retrieval, data preprocessing includes cleansing, normalizing dates, and restructuring columns to fit Prophet's requirements. Data for countries, games, and platforms outside the top 10 are aggregated into an 'Other' category to maintain focus while preserving data integrity:

import pandas as pd

# Load and clean data (query holds the SQL above; connection is an open database connection)

data = pd.read_sql(query, connection)

data['date'] = pd.to_datetime(data['date'])

data = data.ffill()

# Aggregating outside top 10

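# Top 10 (country, game, platform) combinations by total sales

top_categories = data.groupby(['country', 'game', 'platform'])['sales'].sum().nlargest(10).index

# Label each row with its combination, or 'Other' if it falls outside the top 10

data['category'] = data.apply(lambda row: row['country']+'_'+row['game']+'_'+row['platform'] if (row['country'], row['game'], row['platform']) in top_categories else 'Other', axis=1)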

# Prepare data for Prophet

data_prophet = data.rename(columns={'date': 'ds', 'sales': 'y'}).groupby('ds').aggregate({'y': 'sum'}).reset_index()
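If the top 10 is meant per dimension (countries, games, and platforms separately) rather than per combination, the bucketing can be done one column at a time without a row-wise `apply`. The following is a sketch under that assumption; `bucket_top_n` and the toy data are hypothetical.

```python
import pandas as pd


def bucket_top_n(df, column, value_col="sales", n=10, other_label="Other"):
    """Keep the n largest values of `column` by total sales; relabel the rest."""
    totals = df.groupby(column)[value_col].sum()
    keep = totals.nlargest(n).index
    # where() keeps entries that are in the top n and replaces the others.
    return df[column].where(df[column].isin(keep), other_label)


# Toy data (hypothetical) with n=2 to keep the example small.
sample = pd.DataFrame(
    {"country": ["A", "A", "B", "C"], "sales": [6.0, 4.0, 5.0, 1.0]}
)
sample["country_bucket"] = bucket_top_n(sample, "country", n=2)
```

Applying the same helper to `game` and `platform` gives three bucketed columns that can then be grouped by before building a separate `ds`/`y` frame per segment.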

Implementing Prophet for Forecasting

With the data preprocessed and segmented, Prophet is employed to model and forecast sales. The model is configured to accommodate seasonal variations and holiday effects, which are particularly significant in game sales:

# The package was renamed from fbprophet to prophet in version 1.0

from prophet import Prophet

# Configure the Prophet model

model = Prophet(yearly_seasonality=True, weekly_seasonality=False, daily_seasonality=False)

model.add_country_holidays(country_name='US')

# Model fitting and forecasting

model.fit(data_prophet)

future_dates = model.make_future_dataframe(periods=365)

forecast = model.predict(future_dates)

Analysis of Forecasting Results

The forecasting results are analyzed to determine the accuracy and effectiveness of the model. Visualizations such as trend lines and year-over-year growth provide insights into potential future performance:

# Visualizing the results

fig1 = model.plot(forecast)

fig2 = model.plot_components(forecast)

This methodological approach not only predicts future sales but also identifies key trends and variables influencing market dynamics.

Results

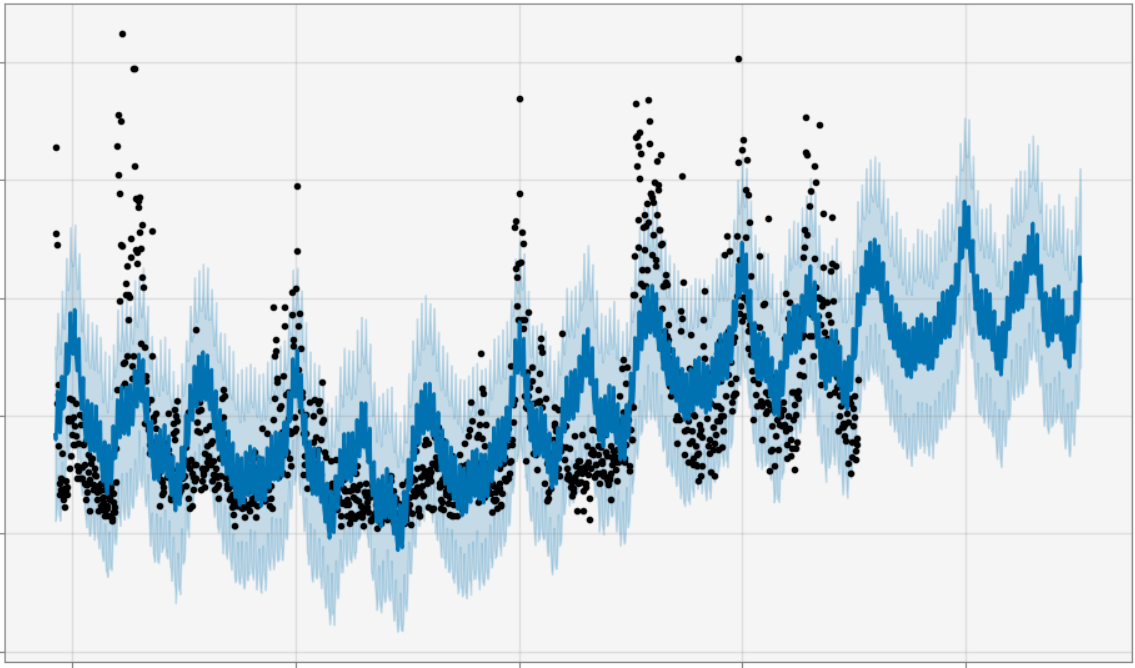

The forecasting results, both individual and aggregated, highlight the complexities of predicting game revenues over time. Notably, the models exhibit triple modality, where three distinct peaks in data frequency can be observed. This effect poses challenges in visualization, often leading to a 'line spaghetti' phenomenon when plotting data across different timescales.

Individual Forecast Analysis

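This chart depicts individual forecasts, with a focus on capturing the nuances of periodic trends and fluctuations. Despite the use of a 90% uncertainty interval (which has to be set through Prophet's interval_width parameter, since the default is 80%), some actuals fall outside the predicted ranges. A well-calibrated 90% interval is expected to miss roughly one actual in ten, so a few misses are normal; clusters of misses point to unforeseen events such as cultural festivals or in-game promotions that the model does not capture.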

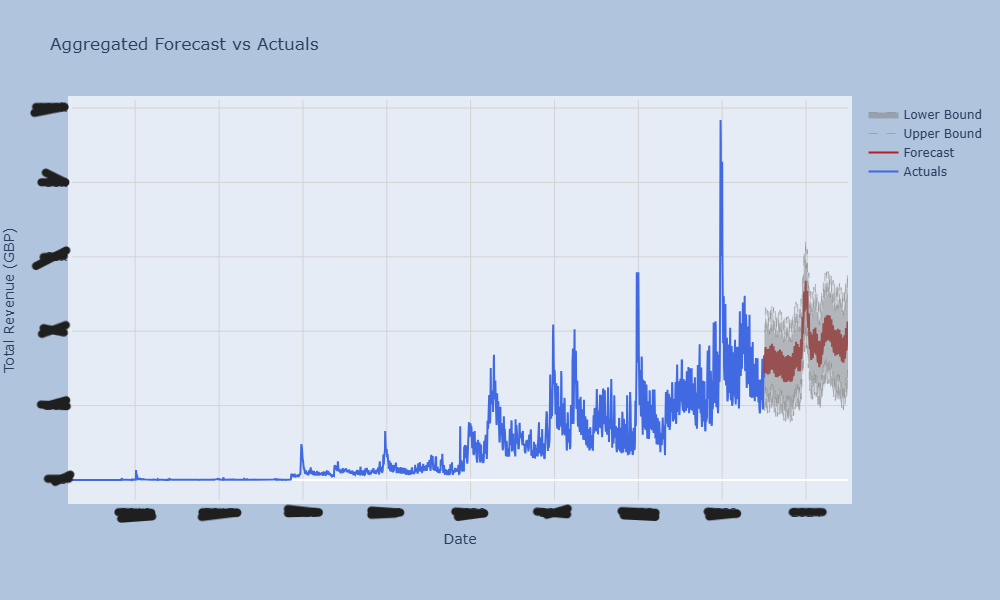
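How often actuals fall inside the predicted range can be measured directly. The sketch below assumes a frame of actuals with `ds` and `y` columns and a Prophet forecast frame with `yhat_lower` and `yhat_upper`; the function name and the toy numbers are made up for the example.

```python
import pandas as pd


def interval_coverage(actuals, forecast):
    """Share of actual values lying inside the forecast's uncertainty interval."""
    merged = actuals.merge(
        forecast[["ds", "yhat_lower", "yhat_upper"]], on="ds", how="inner"
    )
    inside = merged["y"].between(merged["yhat_lower"], merged["yhat_upper"])
    return inside.mean()


# Toy data (hypothetical): the middle day falls below its interval.
days = pd.to_datetime(["2023-01-01", "2023-01-02", "2023-01-03"])
actuals = pd.DataFrame({"ds": days, "y": [10.0, 20.0, 30.0]})
forecast = pd.DataFrame(
    {"ds": days, "yhat_lower": [5.0, 25.0, 25.0], "yhat_upper": [15.0, 35.0, 35.0]}
)
coverage = interval_coverage(actuals, forecast)
```

Coverage well below the nominal interval width on held-out data suggests the intervals are too narrow or that events outside the model are driving the misses.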

Aggregated Forecast vs. Actuals

The aggregated forecast comparison illustrates the overall effectiveness of the forecasting model against actual revenues, revealing insights into the model's predictive accuracy and the impact of external variables not accounted for in the forecast.
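Predictive accuracy on the aggregated series can be summarised with a few error metrics. This is an illustrative sketch, not the evaluation used in the study; `forecast_errors` is a hypothetical helper that expects actuals with `ds` and `y` columns and a forecast frame with `ds` and `yhat`.

```python
import pandas as pd


def forecast_errors(actuals, forecast):
    """Mean absolute error and weighted absolute percentage error (WAPE)."""
    merged = actuals.merge(forecast[["ds", "yhat"]], on="ds", how="inner")
    abs_err = (merged["y"] - merged["yhat"]).abs()
    return {
        "mae": abs_err.mean(),
        # WAPE divides total error by total actuals, so low-revenue days
        # do not dominate the way they can with a plain MAPE.
        "wape": abs_err.sum() / merged["y"].abs().sum(),
    }


# Toy data (hypothetical).
days = pd.to_datetime(["2023-01-01", "2023-01-02"])
actuals = pd.DataFrame({"ds": days, "y": [100.0, 200.0]})
forecast = pd.DataFrame({"ds": days, "yhat": [110.0, 180.0]})
errors = forecast_errors(actuals, forecast)
```

These numbers are only meaningful when computed on dates the model did not see during fitting.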

Conclusion

Summary: This study's exploration with Prophet forecasting models demonstrates their robust capabilities in predicting gaming revenues while also identifying the challenges posed by unforeseen market events and promotions.

Implications: The insights derived from these models are applicable across various industries, offering a valuable tool for predicting trends and adjusting strategies in real time. In particular, the analysis of the competitive impact of rival game releases provides a nuanced view of market dynamics.

Future Directions: Further research could enhance the forecasting accuracy by incorporating a promotions and cultural events calendar. Specifically, integrating data on public holidays, major cultural events, or global phenomena like COVID-19 could significantly refine predictions. Additionally, investigating the dual impact of competing products over time - initially cooperative and later competitive - could yield deeper insights into consumer behavior and market trends.

Engage With Us

Share Your Thoughts: Have you used Prophet for your forecasting needs? We'd love to hear about your experiences and the unique challenges you faced. Share your insights and how they have impacted your business or research.

Share This Analysis: If you found this exploration into forecasting with Prophet insightful, please consider sharing it with your peers. Broadening our community's understanding of these tools can lead to innovative uses and improvements.

Continue Learning: Dive deeper into forecasting by exploring these resources:

- Prophet Quick Start Guide

- Original Research Paper on Prophet

- Article on Understanding Time Series Forecasting with Prophet